Alright, let’s talk about something that seems super basic, but man, the headaches it can cause if you don’t really get it: using bits to represent data. I’ve been around the block a few times, and lemme tell ya, this ain’t just some textbook fluff. It hits you right in the middle of a project.

My Early Days: Blissful Ignorance

When I first started tinkering with computers, I just assumed stuff worked like magic. You type a letter, it appears. You save a number, it’s saved. I didn’t really stop to think how. It was all just… data. Who cared what was going on under the hood, right? As long as my program ran, I was happy. I guess I vaguely knew about 0s and 1s, but it felt very abstract, very far removed from the actual code I was writing.

The Painful Awakening: Running Out of Space

The first time this whole “bits” thing really bit me (pun intended, I guess) was on a project with a tiny microcontroller. We’re talking seriously limited memory. I was trying to store a bunch of sensor readings, and suddenly, my program was crashing, or the data was getting corrupted. I spent days, I tell you, days, pulling my hair out.

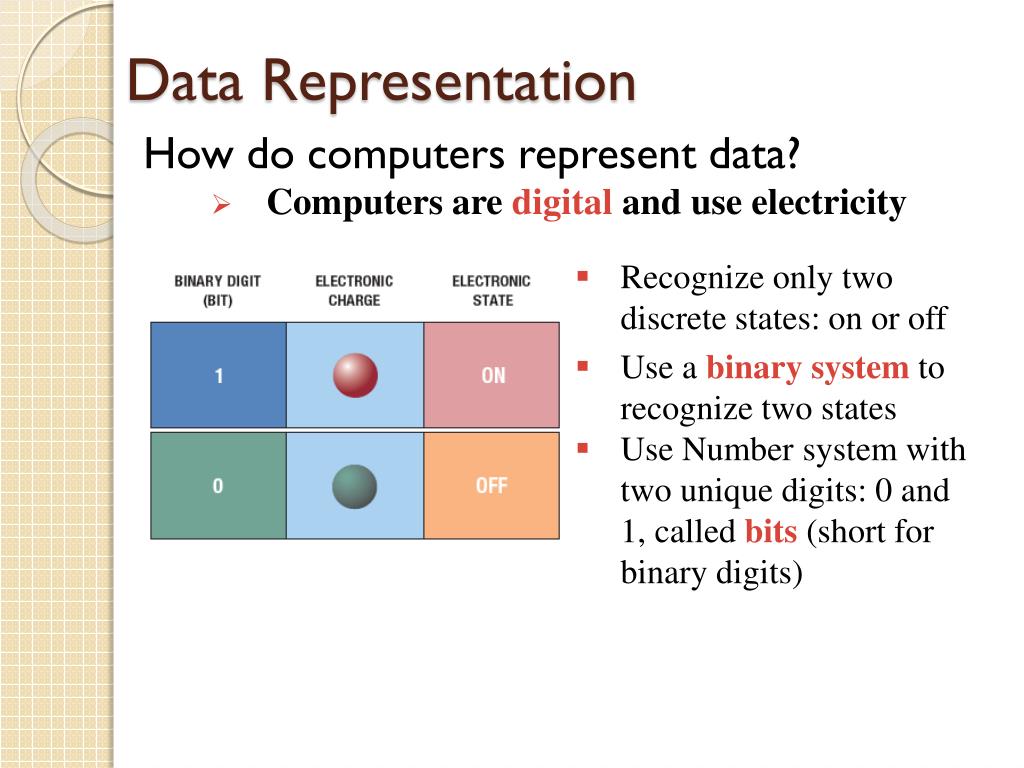

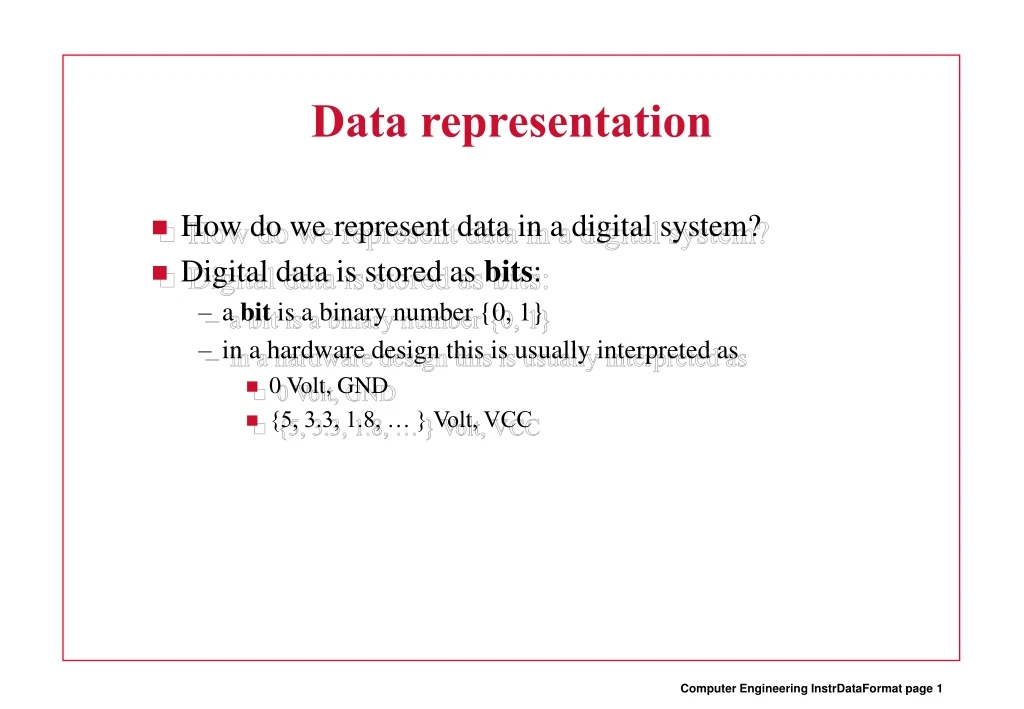

That’s when I had to get real friendly with the idea that everything, and I mean everything, boils down to these little things called bits. A bit is just a single binary digit, a 0 or a 1. Like an on/off switch. That’s it. It’s the smallest piece of info a computer can handle. And computers, bless their hearts, process all data as these bits.

Consequence Number One: Size Really, Really Matters

So, if you’ve only got a few bits, you can’t represent much. Think about it.

- With 1 bit, you have two options (0 or 1).

- With 2 bits, you get four (00, 01, 10, 11).

- With 8 bits – what folks often call a byte – you get 256 possible combinations.
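The pattern behind that list is simply 2 to the power of the number of bits. Here's a quick sketch in Python (the `combinations` helper is my own name for illustration, nothing standard):

```python
def combinations(n_bits):
    """Number of distinct patterns that n_bits can hold: 2 ** n_bits."""
    return 2 ** n_bits

for n in (1, 2, 8, 16):
    print(f"{n:>2} bits -> {combinations(n)} patterns")

# Spell out the four 2-bit patterns explicitly
two_bit_patterns = [format(i, "02b") for i in range(combinations(2))]
print(two_bit_patterns)  # ['00', '01', '10', '11']
```

Every extra bit doubles the number of things you can say, and every bit you drop halves it.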

This was a smack in the face. My sensor readings needed to be precise, but to save space, I was trying to cram them into too few bits. So, a reading of 25.5 degrees might become 25, or worse, if I wasn’t careful with how I was scaling things, it could wrap around and become something completely nonsensical. The consequence? My data was garbage because I didn’t have enough bits to say what I needed to say.
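To make that concrete, here's a rough sketch of the two failure modes, assuming a single unsigned 8-bit slot per reading. The function names and the scale factor of 10 are made up for illustration; this is not the actual firmware, just the same idea simulated in Python with a bit mask.

```python
def store_truncated(reading):
    """Drop the fraction and keep only the low 8 bits (0..255)."""
    return int(reading) & 0xFF

def store_scaled(reading, scale=10):
    """Keep one decimal place by scaling, then keep only the low 8 bits."""
    return round(reading * scale) & 0xFF

print(store_truncated(25.5))     # 25: the .5 is gone
print(store_scaled(25.5) / 10)   # 25.5: 255 just barely fits in 8 bits
print(store_scaled(25.6) / 10)   # 0.0: 256 does not fit, so it wraps to 0
```

One tenth of a degree more and the stored value goes from "fine" to "nonsense", which is the kind of wrap-around that can look like random corruption.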

And text! Oh boy. I learned that even a single character, like the letter ‘A’, usually takes up 8 bits in a plain ASCII-style encoding. That’s a whole byte just for one letter! So, when you’re trying to store a user’s name or a message on a device with hardly any memory, those bits add up scarily fast. Suddenly, “John Doe” isn’t just 8 characters (the space counts too); it’s 8 bytes, or 64 bits, plus maybe something to say “this is the end of the name.” It’s a lot when you’re counting every single bit.
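You can check the byte count for a name yourself. This sketch assumes plain ASCII (one byte per character) and a C-style zero byte as the "end of the name" marker; other encodings and other end markers exist, so treat both as assumptions.

```python
name = "John Doe"
encoded = name.encode("ascii")   # one byte per character in ASCII

print(len(name))          # characters, counting the space
print(len(encoded))       # bytes
print(len(encoded) * 8)   # bits

# Assumption: a C-style string adds one zero byte to mark the end
with_terminator = encoded + b"\x00"
print(len(with_terminator))
```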

Consequence Number Two: What Do These Bits Even Mean?

Then there’s the other fun part. A string of bits, say 01000001, doesn’t inherently mean anything. It’s just a pattern.

Is it:

- The number 65?

- The letter ‘A’ in ASCII (which, spoiler, it is)?

- A specific color in a pixel?

- An instruction for the computer to do something?

The computer doesn’t know unless you tell it how to interpret those bits. I remember one time I was reading data from a file someone else created. I thought it was a series of numbers. Turns out, it was text mixed with some special control codes. My program was spitting out the most bizarre outputs, and it took me ages to figure out I was telling the computer to read the bits the wrong way. It’s like trying to read a French book thinking it’s English – you’ll see letters, but you won’t get the story.
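Here's a small sketch of that same pattern, 01000001, read three different ways. The "gray pixel" reading is a hypothetical convention I picked for illustration; the number and ASCII readings are standard.

```python
pattern = "01000001"
raw = bytes([int(pattern, 2)])        # the same eight bits as one byte

as_number = raw[0]                    # read as an unsigned integer
as_text = raw.decode("ascii")         # read as an ASCII character
as_gray = (raw[0], raw[0], raw[0])    # hypothetical: a gray RGB pixel

print(as_number, as_text, as_gray)    # 65 A (65, 65, 65)
```

Nothing about the byte changed between those three lines. Only the question I asked of it did.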

This means you have to be super careful about data types. If you save something as an integer (a whole number), you better read it back as an integer. If you save it as a floating-point number (a number with a decimal), don’t try to read it as plain text. The bits are the same, but their meaning, their consequence, changes entirely based on context.
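A quick way to see a type mismatch in action is Python's `struct` module. The sketch below assumes a little-endian 32-bit float was saved and then (wrongly) read back as a 32-bit unsigned integer.

```python
import struct

saved = struct.pack("<f", 1.0)          # 4 bytes holding a 32-bit float

right = struct.unpack("<f", saved)[0]   # read back as a float
wrong = struct.unpack("<I", saved)[0]   # same bytes read as an unsigned int

print(right)        # 1.0
print(wrong)        # 1065353216
print(hex(wrong))   # 0x3f800000
```

No error, no warning, just a perfectly valid integer that has nothing to do with the 1.0 that went in.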

Consequence Number Three: Limits, Limits Everywhere

Because you only have a finite number of bits for anything, there are always limits.

Range: If you use 16 bits to store a signed number, you can represent numbers from -32,768 to 32,767. What if you need to store 50,000? Tough luck with a signed 16-bit value. You either go unsigned (0 to 65,535, but no negatives) or you need more bits. This is why you see things like `int`, `long`, `short` in programming – they’re different sized buckets for your numbers.
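The ranges fall straight out of the bit count. Below is a sketch with helper names of my own invention; `wrap_signed` simulates what two's-complement storage would do to a value that doesn't fit, which is an assumption about the target (most hardware works this way, but check yours).

```python
def signed_range(n_bits):
    """Smallest and largest two's-complement values in n_bits."""
    return -(2 ** (n_bits - 1)), 2 ** (n_bits - 1) - 1

def unsigned_range(n_bits):
    """Smallest and largest unsigned values in n_bits."""
    return 0, 2 ** n_bits - 1

def wrap_signed(value, n_bits):
    """Simulate storing value in an n_bits two's-complement slot."""
    value &= (1 << n_bits) - 1           # keep only the low n_bits
    if value >= 1 << (n_bits - 1):       # top bit set means negative
        value -= 1 << n_bits
    return value

print(signed_range(16))        # (-32768, 32767)
print(unsigned_range(16))      # (0, 65535)
print(wrap_signed(50000, 16))  # -15536
```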

Precision: For numbers with decimal points, it’s even trickier. You can’t represent every possible fraction perfectly with a finite number of bits. So, in standard binary floating point, 0.1 + 0.2 doesn’t exactly equal 0.3 in computer land. It’s super close, but sometimes that tiny difference can cascade and cause big problems, especially in financial calculations or scientific stuff. I learned that the hard way when a balance sheet was off by a few cents, and it took a week to trace it back to floating-point weirdness.
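You can reproduce the weirdness in a couple of lines. The workarounds shown (comparing with a tolerance, counting whole cents as integers, or using `Decimal`) are common options, not necessarily what fixed that balance sheet.

```python
import math
from decimal import Decimal

total = 0.1 + 0.2
print(total)           # 0.30000000000000004
print(total == 0.3)    # False

# Option 1: compare with a tolerance instead of ==
close_enough = math.isclose(total, 0.3)

# Option 2: for money, count whole cents as integers
cents = 10 + 20

# Option 3: use decimal arithmetic built from strings
exact = Decimal("0.1") + Decimal("0.2")

print(close_enough, cents, exact)   # True 30 0.3
```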

So, What’s the Big Deal?

At the end of the day, understanding that data is just bits, and that how we use those bits has real consequences, changed how I approach problems. It makes you think harder about:

- Efficiency: Am I using more bits than I need? Can I pack this data tighter?

- Accuracy: Do I have enough bits to represent this information without losing important details?

- Interoperability: If I send this data to another system, will it understand what these bits mean? Are we agreeing on the encoding?
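On the efficiency point, here's one small sketch of what "packing tighter" can look like: two values that each fit in 4 bits sharing a single byte. The function names are hypothetical, and the 4-bit limit is an assumption you'd have to verify against your real data.

```python
def pack_nibbles(high, low):
    """Pack two values in the range 0..15 into one byte."""
    if not (0 <= high <= 15 and 0 <= low <= 15):
        raise ValueError("each value must fit in 4 bits (0..15)")
    return (high << 4) | low

def unpack_nibbles(byte):
    """Split one byte back into its two 4-bit values."""
    return (byte >> 4) & 0x0F, byte & 0x0F

packed = pack_nibbles(9, 3)
print(packed)                  # 147, i.e. 0x93
print(unpack_nibbles(packed))  # (9, 3)
```

That halves the storage, but it also means anyone reading the data has to know about the packing, which is the interoperability question all over again.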

It’s not just academic. It’s about writing software that works, that’s reliable, and that doesn’t waste resources. So yeah, bits. They’re tiny, but their consequences are huge. Next time your program acts weird with data, maybe, just maybe, it’s down to how those humble 0s and 1s are being handled.