Alright, so today I finally got my hands dirty with this neural network stuff. Everyone talks about it and makes it sound super complicated, so I figured I’d just try the first baby step: pushing data through the network once. They call it the ‘forward pass’, right? Sounded simple enough.

Getting Started

So, I fired up my computer. Didn’t use any fancy frameworks yet, just plain old Python and NumPy because I wanted to see the numbers, you know? Had to set up the basic structure first. Felt like drawing a map before a road trip. Okay, needed some input data. Just made up a couple of numbers, like [0.5, -0.2]. Easy.

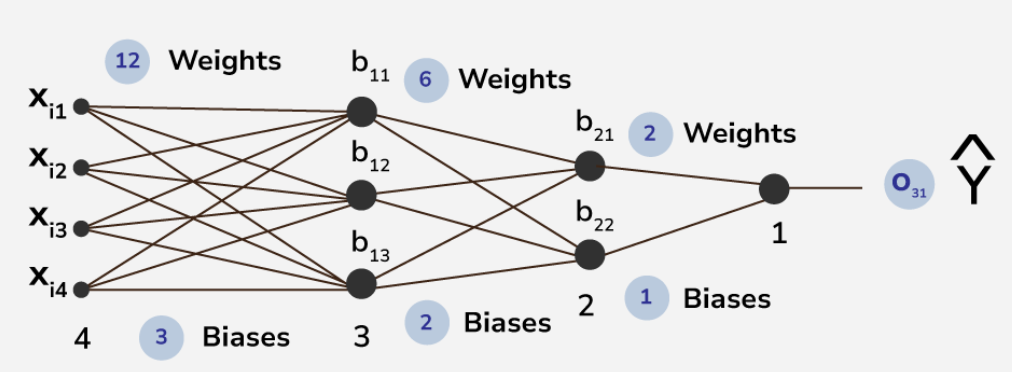

Then, the ‘network’ part. It’s just layers of pretend neurons. For this first try, I kept it tiny: an input layer (that’s just my data, so 2 values), one hidden layer with 3 ‘neurons’, and one output neuron. Seemed manageable.
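Here’s a minimal sketch of that setup in NumPy, assuming the input is stored as a 1-D array. The names `x`, `n_input`, `n_hidden`, `n_output`, `w1_shape`, and `w2_shape` are my own labels for illustration, not anything official:

```python
import numpy as np

# The made-up input from above: two numbers.
x = np.array([0.5, -0.2])

# Layer sizes for the tiny 2-3-1 network.
n_input = x.shape[0]   # 2 values in the input layer
n_hidden = 3           # 3 hidden "neurons"
n_output = 1           # 1 output neuron

# Each weight matrix maps one layer to the next, so its shape is (inputs, outputs).
w1_shape = (n_input, n_hidden)   # input -> hidden
w2_shape = (n_hidden, n_output)  # hidden -> output

print(n_input, n_hidden, n_output)  # → 2 3 1
print(w1_shape, w2_shape)           # → (2, 3) (3, 1)
```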

Now, the tricky bit people mention: the weights and biases. Where do they come from? Well, for this first pass, you just kinda… make them up! Seriously. I used NumPy to generate some small random numbers. It felt weird, like guessing the answers before you even see the question. But hey, that’s the starting point, apparently.

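- Made a 2×3 matrix of random weights for the connections between the input layer and the hidden layer (one weight for each input-to-hidden-neuron pair).

- Made a 3×1 matrix of random weights for the connections between the hidden layer and the output neuron.

- Also sprinkled in some bias numbers, again just random stuff for now: one for each of the 3 hidden neurons and one for the output neuron.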
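A sketch of that random initialization, assuming NumPy’s `default_rng` generator and a standard deviation of 0.1 as the meaning of “small” (both are my choices; the seed and scale are arbitrary):

```python
import numpy as np

# Seeded generator so the "random guesses" are the same on every run.
rng = np.random.default_rng(seed=0)

n_input, n_hidden, n_output = 2, 3, 1

# Small random weights drawn from a normal distribution with std 0.1.
W1 = rng.normal(0.0, 0.1, size=(n_input, n_hidden))   # input -> hidden, shape (2, 3)
W2 = rng.normal(0.0, 0.1, size=(n_hidden, n_output))  # hidden -> output, shape (3, 1)

# One bias per neuron in each layer, also small random values.
b1 = rng.normal(0.0, 0.1, size=n_hidden)   # shape (3,)
b2 = rng.normal(0.0, 0.1, size=n_output)   # shape (1,)

print(W1.shape, W2.shape, b1.shape, b2.shape)  # → (2, 3) (3, 1) (3,) (1,)
```

Seeding isn’t required for a forward pass, but it makes the “random shots in the dark” repeatable while experimenting.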

Pushing the Numbers Through

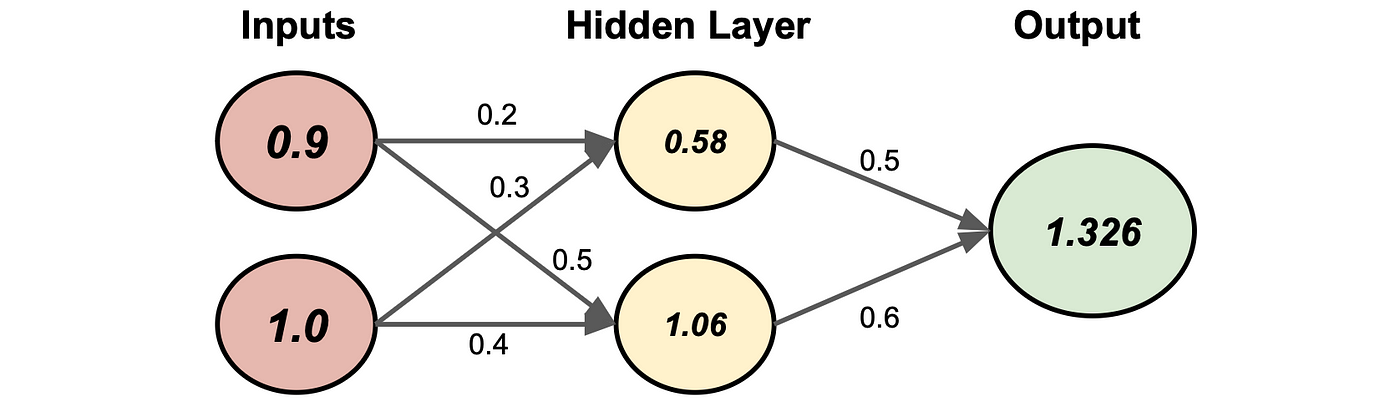

Okay, structure ready, numbers ready. Time to push ’em forward. First step: take my input numbers [0.5, -0.2] and multiply them by the first set of random weights I cooked up (a matrix multiplication, so each hidden neuron gets its own weighted sum of the two inputs). Then I added that layer’s biases, one per hidden neuron. That gave me a new set of three numbers.
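That first step as code, under the same assumptions as before (seeded generator, std 0.1 for the random values). The comment spells out what the `@` operator computes for each hidden neuron:

```python
import numpy as np

rng = np.random.default_rng(seed=0)

x = np.array([0.5, -0.2])                 # input, shape (2,)
W1 = rng.normal(0.0, 0.1, size=(2, 3))    # input -> hidden weights, shape (2, 3)
b1 = rng.normal(0.0, 0.1, size=3)         # hidden biases, shape (3,)

# Weighted sum plus bias: hidden neuron j gets x[0]*W1[0, j] + x[1]*W1[1, j] + b1[j].
z1 = x @ W1 + b1

print(z1.shape)  # → (3,)
```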

But wait, there’s this ‘activation function’ thing. Its job is to squash those results. I used the sigmoid one, the S-shaped curve thingy that maps any number into the range between 0 and 1. Why sigmoid? Because the tutorial I glanced at used it. I applied it to each of the numbers I’d just calculated. Felt like putting the numbers through a strainer.
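The sigmoid itself is a one-liner, 1 / (1 + exp(-z)). A sketch with some made-up example inputs to show the squashing:

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid: squashes any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

z1 = np.array([-2.0, 0.0, 2.0])  # example pre-activations, made up for illustration
a1 = sigmoid(z1)
print(a1)  # middle value is exactly 0.5; the others are roughly 0.119 and 0.881
```

Because NumPy applies `np.exp` element-wise, the same function works on a single number or on the whole hidden layer at once.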

Alright, numbers from the hidden layer? Check. Now, repeat the process for the output layer. Took the results from the hidden layer, multiplied them by the second set of random weights, added the output layer’s bias, and then… another sigmoid squeeze!
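The output layer reuses the exact same recipe. In this sketch the hidden activations `a1` are stand-in values (the sigmoid of random numbers) so the block runs on its own; the shapes match the 2-3-1 network:

```python
import numpy as np

def sigmoid(z):
    # Logistic sigmoid, applied element-wise.
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(seed=0)
a1 = sigmoid(rng.normal(0.0, 1.0, size=3))  # stand-in hidden activations, shape (3,)
W2 = rng.normal(0.0, 0.1, size=(3, 1))      # hidden -> output weights, shape (3, 1)
b2 = rng.normal(0.0, 0.1, size=1)           # output bias, shape (1,)

# Same recipe as the hidden layer: weighted sum, add bias, squash.
z2 = a1 @ W2 + b2
y_hat = sigmoid(z2)

print(y_hat.shape)  # → (1,)
```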

And the Result?

Boom! Out popped a single number. Something like 0.67 (I don’t remember exactly, and it doesn’t matter). And that was it. That was the famous ‘forward pass’. My data went in one end, got jumbled around with my random guesses, and a final number came out the other end.
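Putting it all together, here’s a minimal end-to-end sketch of the forward pass. The helper names `init_params` and `forward`, the seed, and the 0.1 scale are my own assumptions, so the number it prints won’t be my 0.67; with weights this small the output will usually sit close to 0.5:

```python
import numpy as np

def sigmoid(z):
    # Logistic sigmoid, applied element-wise.
    return 1.0 / (1.0 + np.exp(-z))

def init_params(n_input, n_hidden, n_output, rng):
    # Small random weights and biases (the 0.1 scale is an arbitrary choice).
    return {
        "W1": rng.normal(0.0, 0.1, size=(n_input, n_hidden)),
        "b1": rng.normal(0.0, 0.1, size=n_hidden),
        "W2": rng.normal(0.0, 0.1, size=(n_hidden, n_output)),
        "b2": rng.normal(0.0, 0.1, size=n_output),
    }

def forward(x, params):
    # Hidden layer: weighted sum + bias, then sigmoid.
    a1 = sigmoid(x @ params["W1"] + params["b1"])
    # Output layer: same recipe applied to the hidden activations.
    return sigmoid(a1 @ params["W2"] + params["b2"])

rng = np.random.default_rng(seed=0)
params = init_params(2, 3, 1, rng)
y_hat = forward(np.array([0.5, -0.2]), params)
print(y_hat)  # a single value in (0, 1); it depends entirely on the random draws
```

Keeping the parameters in one dictionary is just a convenience; it makes it easy to hand the same random guesses to `forward` again later.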

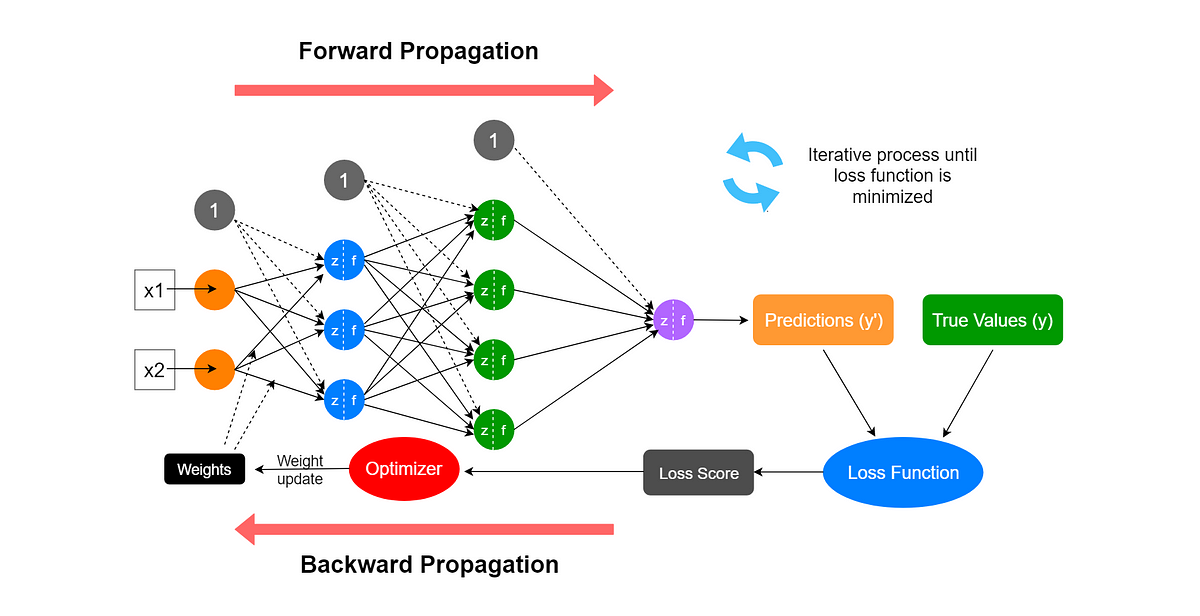

Honestly? It felt a bit… anticlimactic? Like, “Okay, I got a number. Now what?” It doesn’t mean anything yet, because all my weights and biases were just random shots in the dark. The network hasn’t learned anything; it just performed the calculation I told it to.

But still, it felt kinda cool. I actually built the pipes and pushed the water through, even if the pipes are leaky and pointing in the wrong direction right now. Seeing the numbers change at each step and doing the matrix multiplication (thanks, NumPy, you saved me there) made the concept less abstract. It’s just arithmetic, really: multiplying and adding, just… a lot of it, layered up.
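To see that the matrix multiplication really is “just arithmetic”, here’s a sketch that does `x @ W` by hand with plain loops and compares it to NumPy. The helper `matvec_by_hand` and the weight values are made up for illustration, chosen to keep the arithmetic easy to follow:

```python
import numpy as np

def matvec_by_hand(x, W):
    # Plain-Python version of x @ W: for each output neuron j,
    # multiply every input by its weight and add the products up.
    n_in, n_out = W.shape
    out = []
    for j in range(n_out):
        total = 0.0
        for i in range(n_in):
            total += x[i] * W[i, j]
        out.append(total)
    return np.array(out)

x = np.array([0.5, -0.2])
W = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])

print(matvec_by_hand(x, W))  # three weighted sums, one per column of W
print(x @ W)                 # NumPy computes the same numbers in one step
```

For the first column, for example, that’s 0.5 × 1 + (-0.2) × 4 = -0.3.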

Guess the next step is figuring out how wrong this 0.67 is compared to what it should be, and then somehow tweaking all those random numbers I started with. That sounds like the hard part. But for today, just getting the data to flow from start to finish feels like a decent win.